Improving education and creating better doctors

by Sal Nudo / Mar 13, 2018

New research reveals how on-the-spot assessment, feedback, and scalable metrics transform learning

The real-world results of a two-year National Science Foundation-funded study are starting to emerge through an analytics tool called Common Ground Scholar, which aims to end the traditional division between learning and assessment.

The project has been a collaboration among several professors on campus, including Bill Cope and Mary Kalantzis in the College of Education, as well as ChengXiang Zhai (Department of Computer Science) and Duncan Ferguson (College of Veterinary Medicine).

Cope, a professor in the Department of Education Policy, Organization & Leadership, is the principal investigator on the “Assessing Complex Epistemic Performance in Online Learning Environments” project and the lead designer of CGScholar, which offers structured feedback from students’ peers as well as computer feedback. In Cope’s view, this new way of learning could bring about the end of the traditional division between instruction and assessment.

“This learning environment creates a community of learners rather than just individual learners, and learners have more control over learning outcomes, with a very precise view of progress at any one time, where work needs to be done,” he said.

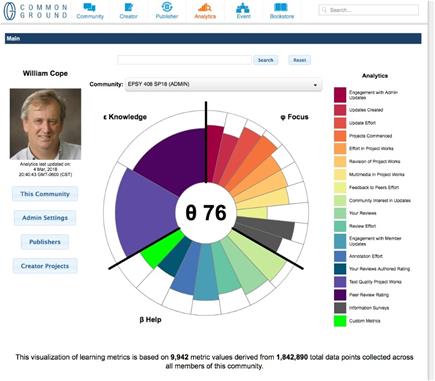

Cope referred to a colorfully charted Aster plot that revealed the progress assessment of 97 students who had mostly completed an eight-week educational psychology course. The chart is the result of almost two million data points and nearly 10,000 pieces of meaningful feedback that have contributed constructively to learning during the class, including the effort students put in and the value of the peer collaboration they have given and received.

Cope said the students have continuous access to their own Aster plots, making progress and assessment more transparent and affording them more responsibility for outcomes.

Maureen McMichael, an associate professor in the Department of Veterinary Clinical Medicine, affirms the educational value of CGScholar. She is participating in an online course taught by Cope and uses her own Aster plot to view personal results. Gone are the days, McMichael said, when students have no idea where they stand in a course.

“It tells you exactly how you’re doing in every way, and it’s visual, so it’s not this horrible list,” McMichael said. “It’s a beautiful visual. It tells you exactly how you’re doing in every area.”

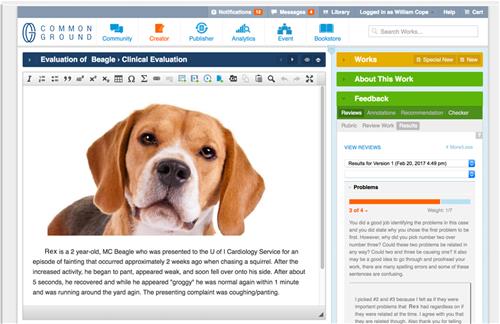

The NSF study is centered on medical and veterinary medicine students who write analyses of specific cases of sick people and animals, organize evidence, and make diagnoses. Through CGScholar, peers offer their views on the cases and suggest revisions, providing learners with a combination of human and machine feedback. The upshot is that students put together their own case studies rather than having information fed to them.

McMichael gave the example of veterinary students who had been assigned a case centered on heart disease in a lemur, a topic they knew little about.

“Each vet student gets their case reviewed by three other vet students, so they’re learning how to write, but they’re also learning how to critique, as well as to do critical clinical analysis,” she said. “And when they critique, they’re learning more about what maybe they should have written, and they go back and they edit. So, it’s in this cycle where it just gets better and better.”

Cope said the ultimate goal of the program is to produce better doctors by increasing their exposure to real-life situations in the medical field and to peer review. In his view, this surpasses being evaluated through rote memorization or taking exams.

Aspiring veterinarians dream of becoming clinicians, according to Ferguson, a professor emeritus of the College of Veterinary Medicine and co-principal investigator on the project, and yet traditional veterinary training programs have delayed introducing students to how clinicians think. Ferguson feels that such training needs to start as soon as possible.

“We do bring them into clinical concepts by trying to use these exercises to link it to what they’re studying in what we call preclinical or basic sciences,” Ferguson said, “and it does force them to start thinking about some of the terminology that clinicians use and also how what they’re learning now has some relevance to their later clinical endeavors.”

From a computer science perspective, Professor Zhai said CGScholar, with its ability to offer highly scalable education, provides an opportunity to develop informative algorithms that compare revisions of a student’s work, analyze the changes, and provide assessment feedback on these to students and professors.

A new CGScholar feature, in fact, allows students to give feedback on the reviews they receive, which, according to Zhai, has generated a great deal of useful, unique data. From it, he said, “We can analyze student behavior and understand how students learn and then develop techniques that can automate some of the assessment.”

Cope, Ferguson, McMichael, and Zhai have been collaborating on the project with Matthew Allender (veterinary medicine), Jennifer Amos (bioengineering), Matthew Montebello (artificial intelligence, University of Malta), and Duane Searsmith, who is a software developer in the College of Education.

Front row: Duane Searsmith, ChengXiang Zhai, Bill Cope, Mary Kalantzis, Matthew Montebello; back row: Maureen McMichael, Chase Geigle, Saar Kuzi, Naichen Zhao, Min Chen, Tabassum Amina, Olnancy Tzirides; on screen: Duncan Ferguson